PAMELA - Objectives and Research Programme

Objectives

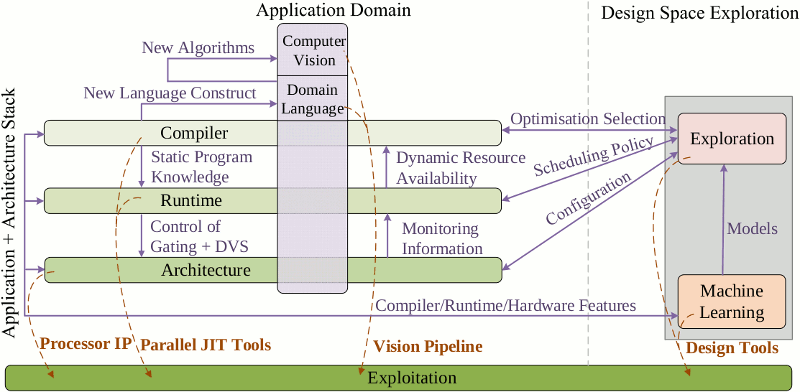

This Programme's approach is to research, innovate and develop core technology for embedded to server-scale many-core computing. It will take a holistic, integrated, end-to-end approach, developing working FPGA and silicon prototypes. It will deliver real processor IP based on emerging applications, together with the intervening software stack. The technical objectives of the project are to:

- Deliver next generation mobile 3D scene understanding and a new generic computer vision pipeline API.

- Research and innovate Domain Specific Languages that enable exploitation of application structure on future heterogeneous many-core platforms.

- Automate the compiler mapping of parallel programs to ever-changing systems using machine learning.

- Innovate by creating a smart, lean, energy-conscious virtualisation layer.

- Co-design a heterogeneous many-core architecture to achieve new levels of energy-efficient performance.

- Automate the exploration of the heterogeneous many-core design space.

- Deliver economic advantage for existing UK companies and new spinouts.

Research Programme

Domain specific language (Paul Kelly).

The objective of this project is the design of a parameterised task-parallel programming model, and the mapping onto it of a wide range of applications via a domain-specific language (DSL). In particular we will use the vision application as a major driver. Our challenge is to identify a suite of problems and applicable parallel algorithms, and to create a software framework that supports their composition in a flexible way that promotes effective task-driven engineering of novel applications. This DSL will be realised as an API callable from common languages such as Java, C and C++. The key function of this layer is to capture the scope for adaptation and selection of implementation alternatives, and to pass an abstract characterisation of code synthesis choices, and their performance consequences, down to lower code generation and architecture configuration layers. In the area of Computer Vision, our starting point is the initial frame-setting work of the Khronos Computer Vision Working Group (http://www.khronos.org/vision), at which we are represented. This approach opens an entirely new space of compilation optimisations, runtime policies and ultimately defines the type of computer architecture we want to build. It also provides a structure for exploring new algorithms that are fed back to the computer vision domain. This forms a vertical slice through the programme and interacts with all projects.

Compilation (Mike O'Boyle).

This project aims at developing a compiler-based, automatic and portable approach to partitioning and mapping parallel programs to any heterogeneous many-core processor. Our approach will be applicable to any parallel program, whether generated by semi-automatic parallelisation tools or by hand. However, we will particularly focus on exploiting the semantic information passed from the domain specific languages and on specialising the generated code to a configurable architecture space. We will also develop energy analysis to provide ahead-of-time information to the runtime on power saving opportunities. There are many decisions to be made when partitioning and mapping a parallel program to a platform. These include determining how much and what type of the potential parallelism should be exploited, the number and type of specialised processors to use, how memory accesses should be implemented, etc. Given this context, there are two broad research areas we wish to investigate in mapping to heterogeneous cores. The first area is concerned with determining the best mapping of an application to any hardware configuration. It tackles the issue of long-term design adaptation of applications to hardware. The second area focuses on more short-term runtime adaptation due to either changing data input or external workload. This project relies on the design space exploration project to help learn and predict good mapping decisions. One of the key challenges is developing specialised code for the vision applications on specialised hardware and passing useful energy information to the runtime.

Runtime (Mikel Lujan).

This project is committed to offering a breakthrough in reducing power for heterogeneous many-core platforms via runtime adaptation, with innovations in virtualization technologies. By using virtualization techniques we provide solutions to a large user base. Given the characteristics of the problem, we consider that control of scheduling, memory allocation and data placement processes, together with the ability to regenerate code during execution, are the initial necessary actuators for our control system, and this functionality is already under the control of Managed Runtime Environments (MREs). This project is therefore tightly integrated with the language and compiler projects. As a first step, we will consider how to adapt MREs to be able to execute on heterogeneous multi-core platforms where there is a lack of cache coherence and shared address space. We will extend this work to interact with the architecture project to define a new set of power performance counters so that we can explore the range of power optimizations available. We want to understand how to build MREs where the memory management and scheduling have a closer collaboration with the underlying system. Once we have such coordination we will explore the range of optimizations that reduce power, focusing on the handling of data. Finally, we will consider the evaluation of the MRE and many-core architecture and reconstruct it for the specialised requirements of vision applications.

Architecture (Steve Furber).

The philosophy of many-core architecture design revolves around issues such as memory hierarchy, networks-on-chip, processing resources, energy management, process variability and reliability. The objective of this project is the design of innovative parameterisable heterogeneous many-core devices that can be configured to meet an application's specific requirements. Knowledge of these requirements is passed via the domain language, compiler and runtime. The architecture will provide energy and resource monitoring feedback to the runtime system, allowing the software to adapt to the hardware state. Architecture exploration will use a high-level ESL (Electronic System-Level) design environment such as BlueSpec to provide the appropriate level of design abstraction. The architecture will be parameterised with respect to the number of cores, the interconnect bandwidth and latency, memory hierarchy, and support for the type and number of different core types, so that at design time the Design Space Exploration project has a significant number of control points to use for design optimisation. Clearly, once implemented on silicon, some of these degrees of flexibility will have been reduced, but the runtime system will still be able to control the number of active units and their supply voltages and clock frequencies (for DVFS).

Design-space Exploration (Nigel Topham).

Many-core systems are inherently complex, creating many challenges both at design time and run time. Experience even with single-core systems has shown that such system complexity translates to a vast array of possible designs, which together define the design space. Within the design space reside good solutions and bad; the task of finding the best solutions is fundamentally infeasible in a traditional manual design process based on empirical know-how. The overall objective of this project is to understand how to find good solutions for many-core systems using approaches that rely on automated search of the design space coupled with learnt models of similar design spaces. A key enabling technology is Machine Learning. This provides a rigorous methodology to search and extract structure that can be transferred and reused in unseen settings. This methodology will be employed on a micro-scale within each sub-project of the programme, e.g. evaluation of a compiler optimisation or selection of a hardware cache policy. More boldly, it will be used across the project to find the best design slice for the vision applications; in other words, the software stack and hardware best suited for delivering the vision application. While the vision application provides an integrating activity across the project, design-space exploration provides an integrating methodology.

Computer Vision (Andrew Davison).

The core challenge here is to pin down the common fundamentals that underlie a large class of computer vision algorithms under the umbrella of real-time 3D scene understanding. This is the capability which will enable application developers to achieve what has always been expected from sensing-equipped AI: robotic devices and systems which can interact fully and safely with normal human environments to perform widely useful tasks. A key reason that such applications have not yet emerged is that the robust and real-time perception of the complex everyday world that they require has simply been too difficult to achieve, algorithmically and computationally. This has been especially true in the domain of commodity-level sensing and computing hardware, which is where the potential for real world-changing impact lies. This project will therefore drive a challenging integrating activity in the programme, interacting with each system layer to generate an architected solution for next generation vision.
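
As an illustration of the automated design-space search described under Design-space Exploration, the following minimal sketch enumerates a tiny parameterised space (number of active cores and a DVFS operating point) and picks the lowest-energy configuration that meets a deadline. Everything in it is an assumption made for illustration: the `Config` fields, the Amdahl-style runtime model, the power model and the operating points are hypothetical, and an exhaustive search over an analytic model stands in for the learnt models the programme proposes.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Config:
    """One hypothetical design point: active cores plus a DVFS operating point."""
    cores: int
    freq_ghz: float
    volts: float


def runtime_s(cfg: Config, work: float = 8.0, serial_fraction: float = 0.1) -> float:
    # Toy model (assumption): Amdahl's law, where `work` is the runtime in
    # seconds on a single core clocked at 1 GHz.
    speedup = 1.0 / (serial_fraction + (1.0 - serial_fraction) / cfg.cores)
    return work / (speedup * cfg.freq_ghz)


def energy_j(cfg: Config) -> float:
    # Toy model (assumption): dynamic power proportional to cores * V^2 * f.
    power_w = cfg.cores * cfg.volts ** 2 * cfg.freq_ghz
    return power_w * runtime_s(cfg)


def design_space() -> list:
    core_counts = [1, 2, 4, 8, 16]
    # (GHz, V) pairs; made-up values, not taken from any real device.
    operating_points = [(0.5, 0.8), (1.0, 1.0), (2.0, 1.2)]
    return [Config(c, f, v) for c, (f, v) in product(core_counts, operating_points)]


def explore(deadline_s: float):
    # Exhaustive search: keep configurations that meet the deadline, then
    # return the one with the lowest modelled energy (None if none qualify).
    feasible = [cfg for cfg in design_space() if runtime_s(cfg) <= deadline_s]
    return min(feasible, key=energy_j) if feasible else None
```

Calling `explore(2.0)` returns the cheapest modelled configuration that finishes within two seconds. In a realistic setting the space is far too large to enumerate, which is why the programme pairs search with learnt models.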

Purpose and aims

Parallelism is for the masses: no longer only an HPC issue, it touches every application developer. We live in turbulent times where the fundamental way we build and use computers is undergoing radical change, providing an opportunity for UK researchers to have a significant societal and commercial impact. With the rapid rise of multi-cores, parallelism has moved from a niche HPC concern to mainstream computing. It is no longer a high-end elite endeavour performed by expert programmers but something that touches every software developer. From mobile phones to data centres, the common denominator is many-core processors. Due to the relentless scale of commoditisation, processor architecture evolution is driven by large volume markets. Today's supercomputers are based on desktop micro-processors; tomorrow's will be based on embedded IP.

Emerging applications will be about engagement with the environment. Concurrent with the shift in how computer systems are built has been the change in how we use them. They are no longer devices simply used at work, but pervade our daily lives. Computing platforms have moved from the desktop and departmental server to the mobile device and cloud. Emerging applications will be based around how users interact in such an environment; harnessing new types of data, e.g. visual and GPS combined with large scale cloud processing. Programming such machines is an urgent challenge. While we can use a small number of cores for different house-keeping activities, as we scale to many-cores there is no clear way to utilise such systems. A research programme that can solve this problem will unlock massive potential.

Processor-chip a thing of the past. The future is multi-IP (many UK) system-on-chip. Energy, power density and thermal limits have forced us into the many-core era. They will push us further into the era of dark silicon, where perhaps only 20% of a chip can be powered at a time. In such a setting more of the same, i.e. homogeneity, gives no advantage, driving the development of specialised cores and increasingly heterogeneous systems built around distinct IP blocks such as GPUs, DSPs etc. The UK is already a leader in the embedded IP space; we now have the opportunity to take this to the general computing arena.

An end-to-end approach delivering impact. This programme's aim is to research and develop core technology suitable for embedded to data-centre many-core computing. It will take an end-to-end approach, developing real processor IP based on real applications and delivering the intervening software stack. It brings together three of the UK's strongest teams in computer engineering, computer architecture, system software and programming languages alongside a world-class computer vision research team.

Driven by new applications that need to be invented - not more of the same, bigger and faster. Too often foundational systems work does not look forward to the applications that shape its context. Instead, we focus on one particular application domain with massive potential: 3D scene understanding. It is poised to effect a radical transformation in the engagement between digital devices and the physical human world. We want to create the computer vision pipeline architecture that can align application requirements with hardware capability, presenting a real challenge for future systems.

Why a programme grant? The challenges of many-cores cannot be addressed by remaining in horizontal academic silos such as hardware, compilers or languages. Equally, they cannot be tackled via application-driven vertical slices where opportunities for common approaches are lost. To have any impact, we must do both, bringing a multi-disciplinary team to work across the divides and show applicability to real economy-driving applications. Any successful project must actively engage with industry to realise and develop the commercial impact of this work.