Silicon Grand Challenges

This doesn’t really represent my vision (if, indeed, I have any) but it does represent my appreciation of what might be important in the coming years. I am also not sure whether these represent grand challenges or, furthermore, whether they are challenges that are divorced from the underlying Physics.

Personally, I think that the grand challenge (and this is not really a technical challenge) is what we all do when Moore’s Law does, eventually, stop operating and the industry matures. The health of the industry (despite its cyclic nature) is predicated on exponential growth and when/if we no longer have this then we are all in trouble. The optimists might say that something will come along to extend its life or to replace it in some way (at some point the benefit of breaking the dominance of Silicon will offset the cost of so doing) but it will probably be a whole set of other people who are spearheading the work.

Returning slightly nearer to the point in hand, I suppose you might aim to tie in the Silicon grand challenges to the ones developed in Computer Science. So, if you are going to aim to understand the brain or provide memory for life, for example, then you really ought to think about how you are going to implement them in a package as compact as the real thing. Additionally, consider the need to train, replicate, and maintain them. I ask my students what they think we are going to do with all of the transistors that they are going to be able to fit on to a chip (independent of whether you can design the devices): do we give all the extra space over to memory; do we have a bigger and more powerful processor; do we have multiple processors; and how do we employ them? There are possible answers, of course (apart from the obvious one that they will need to be so powerful to run Windows 2020), none of which the students seem to consider: natural language and more intuitive interfaces, for example. These difficult tasks fall so far short of the actual requirements implied by the computer science challenge, though, that it is difficult to see how the bigger aims might be achieved.

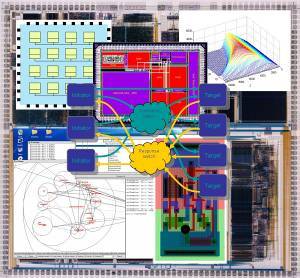

In terms of design, the issue of how to handle the increasing intangibles arising as a consequence of scaling (not least of which is the sheer complexity) will represent a major challenge. It seems a bit trite to say that the problems of designing a 10^10 transistor device in a timely and cost-effective way would be enough of a challenge. It is clear, however, that as technology scales it will be impossible, either in terms of allotted time or in terms of the complexity of finding the answer, to guarantee that a large system will behave according to the design (poor models, delay uncertainty due to parasitic action, increasing process variation, insufficient coverage on verification, insufficient coverage on manufacturing test, etc.). These problems may have a number of solutions (or ways of finessing the problem, which usually seems to be the case) but they may require designs to be inherently robust in the face of these worsening conditions. Furthermore, the issue of reasonable yield may also require designers to adopt such measures to maintain cost-effective manufacturing: fault tolerance, re-organisation, repair, or redundancy. I suppose the issue, ultimately, is to find a way of trading off design complexity and efficiency against the degree of redundancy, doing it automatically and doing it sufficiently well to guarantee the product to some defined level for a known cost overhead. I’m not sure that industry and customers will accept more shoddy hardware/software than we currently use: it’s OK in a desktop PC, where we learn by experience how to work around problems, but unacceptable in a car management system.

All of the other problems accrue with the complexity. Verification is escaping steadily. Ian Philips said that academia seemed to be jumping on the bandwagon trying to solve problems that industry had now rather than the ones they would have. I don’t think that industry is solving the problem; it is merely living with the inconvenience. Jumping on the bandwagon now will, potentially, yield solutions sooner rather than later. In the SIA roadmap, the needs and difficulties keep on getting worse but the potential solutions and approaches are much nearer-term.

In this regard, with respect to how industry views grand challenges, as you heard Ian say, industry has got a severely circumscribed horizon and this seems to be part of the problem. I attended the talk given by Wally Rhines at the IEE earlier this year and, when talking about manufacturing test and its increasing difficulty, he talked of something revolutionary that had given existing techniques a new lease of life. I sat listening, waiting to hear something riveting, as he talked about compressing test vectors. It reminded me of that tune “is that all there is …”. I would be surprised if this were the case but without a long-term view it probably is.