The EPSRC Portfolio Award at the University of Manchester

- The Award


The Advanced Processor Technologies (APT) group of the Department of Computer Science at the University of Manchester is one of eight university research groups in the UK to have been awarded a 5-Year Portfolio Award by the Engineering and Physical Sciences Research Council (EPSRC). These awards recognise a research group's high international research profile and successful record in attracting research funding. The photograph shows Stuart Ward, Director Resources EPSRC, and the Vice Chancellor, Sir Martin Harris (right), signing the Memorandum of Understanding for the Portfolio Award.

The members of the APT group have accumulated many years of experience in the fields of conventional and parallel computer architectures and novel uses of technology to address performance issues with respect to both speed of operation and power consumption.

Current interests within the group include, but are not confined to, the following:

- asynchronous and low power microprocessors.

- design tools for the synthesis and implementation of asynchronous designs.

- parallel computing and dynamic compilation.

- large scale neural network hardware.

- on-chip interconnect for System-on-Chip design.

Current Technological Challenges:

The development of digital technology has been driven, to a large extent, by "Moore's Law", which postulates that the number of transistors that can be integrated onto a single silicon chip doubles every 2-3 years. Current microprocessors exceed a hundred million transistors, and memory devices approach one billion.
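As a rough illustration of the arithmetic behind this trend, the sketch below estimates how long it would take to grow from a hundred million transistors to a billion under a fixed doubling period. The function names are hypothetical, and the constant doubling period is a simplifying assumption rather than a prediction.

```python
import math


def doublings_needed(start_count: float, target_count: float) -> float:
    """Number of doublings required to grow from start_count to target_count."""
    return math.log2(target_count / start_count)


def years_to_reach(start_count: float, target_count: float,
                   doubling_period_years: float) -> float:
    """Years needed, assuming the count doubles once per doubling period."""
    return doublings_needed(start_count, target_count) * doubling_period_years


if __name__ == "__main__":
    start = 100e6   # "a hundred million transistors" (figure taken from the text)
    target = 1e9    # one billion transistors
    for period in (2.0, 3.0):  # the 2-3 year range quoted in the text
        years = years_to_reach(start, target, period)
        print(f"doubling every {period:.0f} years: about {years:.1f} years")
```

A tenfold increase needs a little over three doublings, so under these assumptions the step to a billion transistors takes roughly 7 to 10 years.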

The challenge to the digital system designer is how to employ this abundance of transistors and overcome the design challenges presented by the scale of a future billion-transistor system. The response of the APT group to these challenges spreads over a number of fronts:

- Automating the design process as far as possible so that synthesis takes over many of the time-consuming and skill-intensive tasks of circuit and system design.

- Easing the design process for System-on-Chip (SoC) designs by using an asynchronous Network-on-Chip to connect modules which may be locally synchronous. This addresses the problems of clock distribution across large high speed systems and on-chip delays.

- Exploiting transistor bulk in Chip Multiprocessors and novel Large Scale Neural-Network hardware.

- Application of the very low power potential of asynchronous processors to digital signal processing and to emerging ubiquitous computing fields such as sensor nets.

The work undertaken within the Portfolio is detailed below:

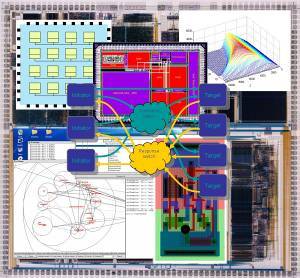

Asynchronous System Design (Network-on-Chip):

An important aspect of the group's work on asynchronous system design has been the development of self-timed on-chip interconnect technologies. The first of these, the MARBLE bus, was developed as part of the DRACO chip development, and won John Bainbridge his PhD and a UK Distinguished Dissertation Award. John then went on to develop CHAIN, a self-timed network-on-chip (NoC) technology that addresses the need for a GALS (globally asynchronous locally synchronous) approach to complex system-on-chip design. Current work within the Portfolio is focussed on developing solutions that will support QoS (Quality-of-Service) on a self-timed NoC.

Asynchronous System Design (Sensor Nets):

As the capabilities of CMOS VLSI, MEMS and radio communications continue to advance, there is a growing interest in industry and academia in sensor nets. These are built from very small integrated nodes, each comprising one or more sensors, some digital processing, a radio link and a power source.

Large numbers of sensor nodes can be configured to form ad-hoc networks to communicate sensor data, via multiple 'hops', to a base data collection point. The sensors may be distributed over an individual human (for medical purposes), a building (for environment monitoring, security or earthquake detection), an estuary (for environmental monitoring) or a battlefield (for surveillance and other purposes).
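The multi-hop idea can be sketched as a breadth-first search outward from the base station. The example below is illustrative only: the node names, positions and radio range are made up, and real ad-hoc routing protocols must also handle link quality, node failure and energy use.

```python
from collections import deque
from math import dist


def build_links(positions, radio_range):
    """Connect every pair of nodes that lie within radio_range of each other."""
    nodes = list(positions)
    links = {node: [] for node in nodes}
    for i, a in enumerate(nodes):
        for b in nodes[i + 1:]:
            if dist(positions[a], positions[b]) <= radio_range:
                links[a].append(b)
                links[b].append(a)
    return links


def routes_to_base(links, base):
    """Breadth-first search from the base station.

    Returns {node: (hop_count, next_hop_towards_base)} for reachable nodes.
    """
    routes = {base: (0, None)}
    queue = deque([base])
    while queue:
        current = queue.popleft()
        for neighbour in links[current]:
            if neighbour not in routes:
                routes[neighbour] = (routes[current][0] + 1, current)
                queue.append(neighbour)
    return routes


if __name__ == "__main__":
    # Hypothetical layout: three nodes in a line plus one out of radio range.
    positions = {"base": (0, 0), "a": (1, 0), "b": (2, 0), "c": (3, 0), "d": (10, 10)}
    routes = routes_to_base(build_links(positions, radio_range=1.5), "base")
    for node, (hops, next_hop) in routes.items():
        print(node, hops, next_hop)
```

In this layout node "c" reaches the base in three hops via "b" and "a", while "d" is out of range and gets no route.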

Individual sensor nodes require power and they must either be equipped with a lifetime power source or be capable of scavenging power from their environments. In either case ultra low power consumption is vital and it is here that the very low power potential of asynchronous systems, capable of powering down with minimal current drain, can make a unique contribution.
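To see why idle current matters so much, consider a node that is active for only a small fraction of the time. The sketch below computes the average power and the resulting battery lifetime; all figures in the example are hypothetical and chosen only to show the arithmetic.

```python
def average_power_uw(active_uw: float, idle_uw: float, duty_cycle: float) -> float:
    """Average power in microwatts for a node active a fraction duty_cycle of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must lie between 0 and 1")
    return duty_cycle * active_uw + (1.0 - duty_cycle) * idle_uw


def lifetime_days(battery_mwh: float, avg_power_uw: float) -> float:
    """Lifetime in days of a battery of the given capacity at a steady average power."""
    hours = battery_mwh * 1000.0 / avg_power_uw  # convert mWh to uWh, then divide
    return hours / 24.0


if __name__ == "__main__":
    # Hypothetical node: 1 mW when active, active 1% of the time, 1000 mWh battery.
    for idle_uw in (100.0, 1.0):
        avg = average_power_uw(active_uw=1000.0, idle_uw=idle_uw, duty_cycle=0.01)
        print(f"idle {idle_uw} uW -> average {avg:.2f} uW, "
              f"{lifetime_days(1000.0, avg):.0f} days")
```

With a low duty cycle the idle term dominates the average, which is why a design that powers down with minimal current drain has such a large effect on lifetime.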

Asynchronous System Design (Signal Processing):

This concentrates on portable applications which have a highly variable work load with long idle periods interspersed with the need for complex, intense, high performance computation. Asynchronous operation is highly suited to digital signal processing as it has the ability to switch rapidly between idleness and full computing power with negligible hardware or software overhead. It offers power efficient computing as power is consumed only while useful work is done, and the unsynchronised circuit switching lowers electromagnetic interference.

Two applications are being investigated within the portfolio award. The first is high performance, power efficient function units for use in a DSP. The core of the function units is the multiply-accumulate design which has a combined multiplier-adder structure whose logic and circuit design have been power optimised. Currently, full custom data paths are being assembled using two types of pass transistor logic families. It is planned to place these in a surrounding test framework which will then be submitted for fabrication later in 2004. A period of evaluation will then follow.
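The multiply-accumulate operation is central to DSP workloads because common kernels such as FIR filters reduce to repeated steps of the form acc = acc + a * b. The following is a minimal software sketch of that pattern, not a model of the group's hardware; the function names are hypothetical.

```python
def mac(acc, a, b):
    """One multiply-accumulate step: returns acc + a * b."""
    return acc + a * b


def fir_filter(samples, coeffs):
    """Direct-form FIR filter: y[n] = sum over k of coeffs[k] * samples[n - k].

    Samples before the start of the input are treated as zero.
    """
    output = []
    for n in range(len(samples)):
        acc = 0
        for k, coeff in enumerate(coeffs):
            if n - k >= 0:
                acc = mac(acc, coeff, samples[n - k])
        output.append(acc)
    return output


if __name__ == "__main__":
    # Two-tap filter that adds each sample to its predecessor.
    print(fir_filter([1, 2, 3, 4], [1, 1]))  # prints [1, 3, 5, 7]
```

Each output sample costs one MAC per filter tap, so the speed and energy of the MAC unit largely determine the cost of the whole filter.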

The second project concerns the design and implementation of an asynchronous Viterbi decoder aimed at portable optical storage devices. The decoder will help recover read channel data despite interference. To enable it to deal with both current and future storage systems, a key feature will be the ability to program the decoder's characteristics for a particular application. This work is expected to be an active area of research throughout the period of the portfolio award.
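As an illustration of how a Viterbi detector recovers data from a channel with interference between neighbouring bits, the sketch below detects a bit sequence sent through a small FIR channel model. The channel model, the squared-error metric and all names are assumptions made for this example; the text does not specify the decoder's design. Passing the channel taps as a parameter loosely mirrors the idea of a decoder whose characteristics can be programmed.

```python
from itertools import product


def channel_output(bits, taps):
    """Noise-free output of an FIR channel; bits before the start count as 0."""
    return [
        sum(tap * (bits[n - k] if n - k >= 0 else 0) for k, tap in enumerate(taps))
        for n in range(len(bits))
    ]


def viterbi_detect(received, taps):
    """Return the bit sequence whose channel output is closest to `received`.

    A state is the tuple of the most recent len(taps) - 1 bits, newest first.
    The path metric is the accumulated squared error.
    """
    memory = len(taps) - 1
    states = list(product((0, 1), repeat=memory))
    start = (0,) * memory
    inf = float("inf")
    metric = {s: (0.0 if s == start else inf) for s in states}
    paths = {s: [] for s in states}

    for sample in received:
        new_metric = {s: inf for s in states}
        new_paths = {s: [] for s in states}
        for state in states:
            if metric[state] == inf:
                continue  # state not yet reachable
            for bit in (0, 1):
                expected = taps[0] * bit + sum(t * b for t, b in zip(taps[1:], state))
                next_state = ((bit,) + state)[:memory]
                cost = metric[state] + (sample - expected) ** 2
                if cost < new_metric[next_state]:  # keep only the best survivor
                    new_metric[next_state] = cost
                    new_paths[next_state] = paths[state] + [bit]
        metric, paths = new_metric, new_paths

    best_state = min(states, key=lambda s: metric[s])
    return paths[best_state]


if __name__ == "__main__":
    bits = [1, 0, 1, 1, 0, 0, 1, 0]
    taps = [1, 1]  # assumed channel: each output is the current bit plus the previous one
    noise = [0.2, -0.3, 0.1, -0.2, 0.3, 0.1, -0.1, 0.2]  # made-up disturbance
    noisy = [c + n for c, n in zip(channel_output(bits, taps), noise)]
    print(viterbi_detect(noisy, taps) == bits)
```

This version stores whole survivor paths for clarity; a hardware decoder would normally use a bounded traceback memory instead.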

Asynchronous System Design (The Balsa Synthesis System):

Balsa is an asynchronous circuit synthesis system developed over a number of years at the APT group. It is built around the Handshake Circuits methodology and can generate gate level net-lists from high-level descriptions in the Balsa language. Both dual-rail (QDI) and single-rail (bundled data) circuits can be generated.

The approach adopted by Balsa is that of syntax-directed compilation into communicating handshaking components and closely follows the Tangram system of Philips. The advantage of this approach is that the compilation is transparent: there is a one-to-one mapping between the language constructs in the specification and the intermediate handshake circuits that are produced. It is relatively easy for an experienced user to envisage the architecture of the circuit that results from the original description. Incremental changes made at the language level result in predictable changes at the circuit implementation level. This is important if optimisations and design-tradeoffs are to be made easily and contrasts with a VHDL description in which small changes in the specification may make radical alterations to the resulting circuit (indeed it is possible to describe systems in VHDL that are not synthesisable at all!).
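The one-to-one character of syntax-directed compilation can be shown with a toy translator in which every language construct produces exactly one component. This is a sketch of the principle only: the construct classes and the component labels ("transfer", "sequencer", "parallel", "loop") are illustrative and are not the Balsa language or the output format of the Balsa tools.

```python
from dataclasses import dataclass


@dataclass
class Assign:
    target: str
    source: str


@dataclass
class Seq:
    first: object
    second: object


@dataclass
class Par:
    first: object
    second: object


@dataclass
class Loop:
    body: object


def compile_node(node, netlist):
    """Append one component per construct to netlist and return its index."""
    if isinstance(node, Assign):
        entry = ("transfer", node.source, node.target)
    elif isinstance(node, (Seq, Par)):
        left = compile_node(node.first, netlist)
        right = compile_node(node.second, netlist)
        kind = "sequencer" if isinstance(node, Seq) else "parallel"
        entry = (kind, left, right)  # refers to child components by index
    elif isinstance(node, Loop):
        entry = ("loop", compile_node(node.body, netlist))
    else:
        raise TypeError(f"unknown construct: {node!r}")
    netlist.append(entry)
    return len(netlist) - 1


if __name__ == "__main__":
    # Repeat forever: a := x, then (b := y in parallel with c := z).
    program = Loop(Seq(Assign("a", "x"), Par(Assign("b", "y"), Assign("c", "z"))))
    netlist = []
    compile_node(program, netlist)
    for index, component in enumerate(netlist):
        print(index, component)
```

Because each construct maps to one component, changing `Par` to `Seq` in the source changes exactly one entry in the result, which is the kind of predictability described above.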

Chip-Multiprocessors:

The purpose of this research is to investigate new chip multi-processor architectures made possible by advances in hardware technology, and to couple these with recent developments in language implementation technology, particularly dynamic compilation and program transformation guided by runtime information. Our belief is that modern high level parallel compilation techniques can produce significant advances when applied in a dynamic environment to the issues of chip multi-processor parallelism. In turn, dynamic compilation can benefit greatly from run-time feedback provided directly by the hardware. It is essential therefore that an integrated system approach is taken. Although many of the techniques which we propose to investigate are largely language independent, it is necessary to choose a practical environment in which to perform experiments. We propose to use Java mainly because there are existing dynamic compilation approaches which can be extended and adapted.

Large-Scale Neural-Networks:

The group also has interests in hardware support for large-scale neural networks. The goal here is to develop a System-on-Chip that can be used to form a multi-chip system capable of simulating a billion spiking neurons in real time. The dynamical properties of large systems of spiking neurons are complex and not well-understood, and it is hoped that this work will yield an experimental environment in which these properties can be investigated at a larger scale than is possible with current software and hardware models. The results should be of interest both to computational cognitive scientists and to neuroscientists.
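For readers unfamiliar with spiking neurons, the sketch below simulates a single leaky integrate-and-fire neuron, one of the simplest spiking models. The choice of model and all parameter values are assumptions made for illustration; the text does not say which neuron model the hardware will support.

```python
def simulate_lif(input_current, dt_ms=1.0, tau_ms=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    The membrane potential decays towards v_rest, is driven by the input,
    and is reset to v_reset whenever it reaches v_threshold.
    Returns the list of time-step indices at which the neuron spiked.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        v += dt_ms / tau_ms * (-(v - v_rest) + current)
        if v >= v_threshold:
            spikes.append(step)
            v = v_reset
    return spikes


if __name__ == "__main__":
    print(simulate_lif([2.0] * 30))   # strong constant input: regular spiking
    print(simulate_lif([0.5] * 100))  # weak input: potential never reaches threshold
```

Simulating a billion such neurons in real time means performing an update like this, plus spike delivery between neurons, for every neuron in every time step, which motivates dedicated hardware.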