Article Concerning The AMULET Group

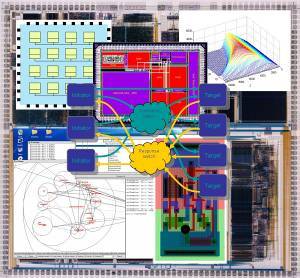

Date: Mon, 19 Dec 1994 16:12:47 GMT
From: more@power.globalnews.com
To: mclarke@world.std.com
Subject: 3064 CHIPS WITHOUT TICKS - WHY NOT DO AWAY WITH PROCESSOR CLOCKS?

CHIPS WITHOUT TICKS - WHY NOT DO AWAY WITH PROCESSOR CLOCKS? (December 16th 1994)

The world of the microprocessor is one buzzing with innovation, right? Well, maybe, but a few days at the Microprocessor Forum could have had you feeling that the industry was in something of a rut. Faster clock speeds and increased degrees of parallelism are the staples of the chip makers; evolution, rather than revolution.

So what about the prospects for something really different? There's optical computing of course, and Intel and Hewlett-Packard's collaboration, which is thought to be based upon Very Long Instruction Words (VLIW), but that seems about it.

However, while the rest of the world cranks up clock speeds further and further, a few groups are experimenting with processors with no clocks at all. These "asynchronous processors" do away with the idea of having a single central clock keeping the chip's functional units in step. Instead, each part of the processor, the arithmetic units, the branch units, etc., all work at their own pace, negotiating with each other whenever data needs to be passed between them.

Today's computers are clock-based for good reasons - for a start it makes them easier to build. A central clock means that all the components crank together and form a deterministic whole. So it is relatively easy to verify a synchronous design, and consequently to ensure that the chip will work under all circumstances. By comparison, verifying an asynchronous design, with each part working at its own pace, is pretty nightmarish.

However, clocks too are proving to have their problems. As the clocks get faster, the chips get bigger and the wires get finer. As a result of this it becomes increasingly difficult to ensure that all parts of the processor are ticking along in step with each other.
Even though the electrical clock pulses are travelling at a substantial fraction of the speed of light, the delays in getting from one side of a small piece of silicon to the other can be enough to throw the chip's operation out.

Steve Furber, ICL Professor of Computer Engineering at the University of Manchester in the UK, points out various other shortcomings. One of the most interesting is the realisation that the whole of the conventionally clocked chip has to be slowed right down so that the most sluggish function of the most sluggish functional unit doesn't get left behind.

To take a simple example: how long does it take for an arithmetic unit to add two numbers? The answer is, it depends on what the two numbers are. In rare cases additions can slow down quite dramatically due to the pattern of bits generated and the way that the carry-bits have to be handled.

Faced with this problem the conventional designer has two choices. He or she can throw in some extra circuitry to try to speed up these slow special cases, or alternatively just shrug and slow everything down to take account of the lowest common denominator. Either way the result is that resources are wasted or the chip's speed is determined by an instruction that may hardly ever be executed. It would be much more sensible if the chip only became more sluggish when a tricky operation was encountered.

In addition, conventional processors are becoming increasingly power-hungry. Today's highest-end chips, like DEC's Alpha and the PowerPC 620, give off around 20 to 30 watts in normal operation. Furber extrapolates current trends to show that by the turn of the millennium, if we were to continue to use 5V supplies, we could expect to see a 0.1 micron processor dissipating 2 kW. Of course, we won't be using 5V at 0.1 micron, but reducing the supply to 3V, and then 2V, only reduces the 2 kW to 660 W and then 330 W, which are still very high dissipation levels.
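
As a rough sanity check on those supply-voltage figures, the sketch below applies the usual first-order rule that CMOS switching power grows with the square of the supply voltage (P ~ C * V^2 * f). It assumes capacitance and clock frequency stay fixed, which the article does not state, and the function name `scaled_power` is purely illustrative. The simple rule gives values in the same region as the quoted 660 W and 330 W but not identical to them, so Furber's own extrapolation presumably rests on assumptions not given here.

```python
def scaled_power(p_ref_watts, v_ref, v_new):
    """Scale switching power with the square of supply voltage.

    Assumes capacitance and clock frequency are unchanged (an assumption
    made for this sketch, not something the article specifies).
    """
    return p_ref_watts * (v_new / v_ref) ** 2


P_AT_5V = 2000.0  # the article's extrapolated 2 kW figure at 5 V

for volts in (5.0, 3.0, 2.0):
    watts = scaled_power(P_AT_5V, 5.0, volts)
    print(f"{volts:.0f} V -> {watts:.0f} W")
# prints:
# 5 V -> 2000 W
# 3 V -> 720 W
# 2 V -> 320 W
```

Either way the conclusion in the text holds: lowering the supply voltage alone still leaves dissipation in the hundreds of watts.
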
Furber also argues that one of the shortcomings of the conventional clocked processor is that many of the logic-gates in the chip are forced to switch states simply because they are being driven by the clock, and not because they are doing any useful work. CMOS gates only consume power when they switch, so removing the clock removes the unnecessary power consumption.

This March, a team led by Furber succeeded in building a completely asynchronous version of Advanced RISC Machines' ARM processor - the chip that powers Apple's Newton. The work leant heavily on funding from the European Commission's Esprit Open Microprocessor Systems Initiative and the result is claimed to be the world's first asynchronous implementation of a commercial processor - it runs standard ARM binaries.

In building the chip, the team had to overcome some formidable problems. Number one was the lack of suitable design tools. Today's chip-makers rely heavily on computer-aided design to help them lay out their silicon. These tools can indicate whether a design will function in the way intended and can perform quite complex tasks like spotting and deleting redundant circuitry. Unfortunately, they screw up when applied to asynchronous processors, where the criteria of "good design" are different.

Take, for example, the problem of "race hazards" and what happens when a signal gets ever so slightly delayed in a chip. Ironically, timing can be more critical within an asynchronous chip than in a conventionally clocked one. Imagine a logic element on a chip with two inputs and one output. If the data to one of the inputs arrives infinitesimally later than the data to the other input, the output will be momentarily wrong. On a conventional chip this doesn't matter: as long as the glitch has gone by the time the next clock cycle comes around, all will be well. But on an asynch chip... oh dear - no clock, and nothing to differentiate between glitch and data.
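
The race hazard described above can be illustrated with a toy discrete-time model. This is only a sketch: the choice of an XOR gate, the waveforms, and the function name `xor_gate_trace` are assumptions made for the example, not details from the AMULET design.

```python
def xor_gate_trace(a_wave, b_wave, b_delay):
    """Return the XOR output at each time step when input b arrives late.

    b is delayed by holding its initial value for b_delay extra steps.
    """
    b_late = [b_wave[0]] * b_delay + b_wave[: len(b_wave) - b_delay]
    return [a ^ b for a, b in zip(a_wave, b_late)]


# Both inputs are meant to rise together, so the XOR output should stay at 0.
a = [0, 0, 1, 1, 1, 1]
b = [0, 0, 1, 1, 1, 1]

ideal = xor_gate_trace(a, b, b_delay=0)   # [0, 0, 0, 0, 0, 0]
skewed = xor_gate_trace(a, b, b_delay=1)  # [0, 0, 1, 0, 0, 0]

# A clocked design samples only once the output has settled,
# so it never sees the momentary 1.
sampled_at_clock_edge = skewed[-1]        # 0

# A circuit that reacts to every output transition sees two spurious edges.
spurious_edges = sum(1 for x, y in zip(skewed, skewed[1:]) if x != y)  # 2
print(ideal, skewed, sampled_at_clock_edge, spurious_edges)
```

In the skewed trace the output is briefly 1 even though the settled value is 0, which is exactly the kind of pulse a clockless circuit could mistake for real data.
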
The glitch problem can be overcome by adding a couple of extra transistors to the gate design, but yes, you guessed it - conventional IC design tools will try to remove them as being redundant.

At a higher level, there are other timing problems. It is quite possible for a badly designed processor to deadlock, with one functional unit waiting for data from another, unaware that it will never come. Conceptually, it is the same kind of problem that bedevils anyone writing parallel software for multiprocessor machines. Guaranteeing that the processor never dies in this way is tricky. Although you can run simulations, they only establish that the chip does not freeze under the simulated conditions and not that it is totally freeze-free.

The upside is that deadlocks make it easy to spot exactly when and where in the processor a problem occurred. Furber points out that when a clocked design hits similar problems it will simply grind on to the end of its run, spitting out an incorrect answer, so that trying to identify exactly where the problem occurred is even more problematic.

The resultant asynchronous ARM chip, dubbed AMULET1, is still a little rough around the edges; compared to a conventional ARM6 it is bigger, uses more transistors and draws more power. Bearing in mind that ARM6 is the fourth iteration of a chip based upon well-known RISC principles, that is hardly surprising. The design of AMULET2 is virtually complete, with silicon expected towards the middle of next year. Meanwhile, the existing chip shows some novel properties, in particular an ability to degrade gracefully in performance terms as voltage is decreased and the temperature changes - the test chips worked correctly between -50C and 120C, running more slowly as the temperature was increased.

So, will asynchronous processors become the next big thing? Even their best friends are cautious.
Furber's best guess is that asynchronous techniques will find their way into certain niches; in particular, he cites embedded applications such as CD error correctors, where the work required is extremely bursty, or something like the Apple Newton, where power-saving requirements make the approach attractive.

It is also possible that we will see mix 'n match chips, mainly clocked, but with some asynchronous parts. This is the approach that Advanced RISC Machines itself is considering, with the company's engineering director suggesting that "complete functional blocks, like a multiplier possibly, may go asynchronous in a couple more product generations." Meanwhile, Philips Research labs in the Netherlands is looking at the technology for digital signal processing, and Ivan Sutherland, the doyen of asynchronous technology, has reportedly described an asynchronous implementation of the SPARC architecture.

Today the industry has a massive cultural investment in clocked processors, both in terms of tools and training, and the only reason for companies to make painful cultural transitions is if significant technical advantages can be demonstrated. Furber admits quite openly that "we are still short of convincing demonstrations" of these advantages. Perhaps AMULET2 will fill the gap.

Moreover, as the returns to be gained from tweaking existing RISC designs begin to diminish, processor designers will no doubt keep revisiting all kinds of esoteric technology in search of that elusive competitive edge. Who's to say that they won't decide to throw away their clocks?

(C) PowerPC News - Free by mailing: add@power.globalnews.com